In the third article of this series, Keir Regan-Alexander looks at ‘Hybrid Collage’ and how it’s opening up new possibilities for concept design and image creation.

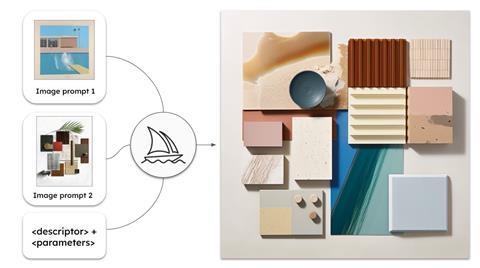

The term ‘ideation’ always sounds made up to me, but essentially this is what these image generation platforms aim to do. “Hybrid Collage” is a term I have started to use to describe the process of image creation with diffusion models, like Midjourney, using a novel recipe of image, sketch and text prompts. It’s perhaps most directly applicable to RIBA Plan of Work stage 2 / AIA SD.

The process is akin to pinning up all the inspiration, precedent studies and icons for a project in one place above your drawing board and trying to hold them in your mind all at once while you sketch. Each is an ingredient to be plucked from the shelf and combined in new ways. The outcomes of such experiments are highly unpredictable, but that’s what makes them fun.

When collaging ideas using diffusion models you will go down many blind alleys, but gradually you form a firm opinion about which path you wish to take and start to guide the model into an area of focus by honing your prompts. This is done by casting a wide net initially and seeing the range of responses the model sends back to you.

Once you see an option that is very close to what’s in your head, you re-use the same “seed code” to keep new versions within the same line of enquiry. This lets you maintain consistent results and manage how they evolve. Further control is achieved by using negative prompting to refine the idea through the exclusion of unwanted elements.
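As a rough illustration of how seed re-use and negative prompting fit together, here is a minimal Python sketch that assembles a Midjourney-style prompt string. It assumes Midjourney's `--seed` and `--no` parameter syntax, with image prompt URLs placed ahead of the text. The function name `build_prompt`, the seed value and the example URL are hypothetical, chosen only for illustration.

```python
from typing import Optional, Sequence


def build_prompt(
    text: str,
    image_urls: Sequence[str] = (),
    seed: Optional[int] = None,
    exclude: Sequence[str] = (),
) -> str:
    """Assemble a Midjourney-style prompt string (illustrative helper only)."""
    # Image prompts (e.g. a scanned hand sketch) go first, as URLs.
    parts = list(image_urls)
    # The text prompt follows the image prompts.
    parts.append(text.strip())
    # Negative prompting: list the elements you want excluded.
    if exclude:
        parts.append("--no " + ", ".join(exclude))
    # Re-using the same seed keeps new versions close to a result you liked.
    if seed is not None:
        parts.append(f"--seed {seed}")
    return " ".join(parts)


# Hypothetical example: same seed, refined text, unwanted elements excluded.
prompt = build_prompt(
    "timber pavilion in a meadow, architectural sketch",
    image_urls=["https://example.com/my-sketch.png"],
    seed=1234,
    exclude=["cars", "people"],
)
print(prompt)
```

Keeping the seed fixed while changing only one ingredient at a time (the text, the sketch or the exclusions) makes it easier to see which change produced which effect.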

In this method you provide the model with ideas in the form of text, image and/or hand sketch inputs and let it go to work. It will present back a range of possible options to develop further. When you find a path you like, you take it until the concept feels like it has reached a conclusion. Images can then be blended to remix the same visual concept into a new composition, or even used to train your own bespoke model.

Initially the aesthetic potential of these tools appeared boundless and experimentation was enthusiastic but slightly random. But very engaged designers are becoming more sophisticated in their direction by combining wireframe, sketch, render, image and text, meaning they are able to exert ever more control over the final outcomes. Midjourney will react very effectively to an architectural sketch as an image prompt, understanding the overall nature of the composition.

Designer Tim Fu is an early proponent of using AI Diffusion models with sketches for design work and has deployed Midjourney to great effect. His recent work “House of Future Fluidity” is less dreamlike than most of the architectural outputs from Midjourney but is definitely starting to look at home within the parametric canon and feels within touching distance of being a buildable design concept.

Midjourney’s main competitor, Stable Diffusion, produces images that are powerful, albeit slightly less alluring. However, because it is an open-source platform, there is a growing community of software developers building tools and models to enhance experimentation. This has allowed the use of ‘ControlNets’ and ‘In-painting’, two incredibly powerful features that give architects the ability to go straight from a hand-drawn sketch to a render in real-world context, in less than an hour.

Be Careful of:

A. IP infringement considerations. This is relevant both to the dataset upon which a diffusion model is trained and to the image prompts you decide to add to your idea input in order to generate your new imagery.

For example, Adobe’s Firefly is trained on Adobe stock imagery and so licensing of any images you produce isn’t an issue provided you are paying Adobe for a licence. There is scant case law around this new phenomenon, but if you are commercialising image use and making money (in the form of fees) from utilising such models, technically you may be at risk of an infringement.

Incidentally, you can see the most popular training set of 5.8 billion images used by these AI models at HaveIBeenTrained.com, and if you would like your images to be removed from the library, that can be done upon request. A key thing to note here is that imagery is assumed to be included by default: you have to take the active step of asking to have images removed or delisted from the training data to avoid being sampled.

In the event that a claim were made, unscrambling exactly what proportion of an invention was derived directly from a particular source would be incredibly difficult and while a work might appear derivative, the percentage weighting of a single source image or design may well prove to be vanishingly small in practice - the courts will have to prove this out.

B. Addictive use. There is a ‘slot machine’ quality to these apps and you will end up wasting time if you aren’t focused; like infinite scrolling on social media, it can become an unproductive and ultimately fruitless dopamine binge. The software also goes to work incredibly fast, giving potentially engaging results within minutes.

By contrast, traditional design work has always taken a great deal of deliberation, effort and time to garner results – it has required endurance and patience. There is an emergent potential for apps like Midjourney to do to architectural design what TikTok has done to long-form video content.

C. Believing it’s all great. Platforms like Midjourney create very alluring imagery with great ease, but when striking imagery is abundant, this calls for a greater level of judgement from the designer. Be careful you aren’t too easily seduced by the first thing that catches your eye.

>> Also read: The first movers creating Generative Design & AI tools for architecture

>> Also read: Generative Design & AI Trends: ‘Auto Optioneering’

>> Also read: Generative Design & AI Trends: ‘Leveraged Drafting’

Postscript

Keir Regan-Alexander is an AEC Domain Expert and Consulting Director operating at the intersection of architecture practice, sustainable development and software design.
